Microsoft’s new AI can simulate anyone’s voice with 3 seconds of audio

Ars Technica

On Thursday, Microsoft researchers announced a new text-to-speech AI model called VALL-E that can closely simulate a person’s voice when given a three-second audio sample. Once it learns a specific voice, VALL-E can synthesize audio of that person saying anything, and do it in a way that attempts to preserve the speaker’s emotional tone.

Its creators speculate that VALL-E could be used for high-quality text-to-speech applications, for speech editing, where a recording of a person could be edited and changed from a text transcript (making them say something they originally didn’t), and for audio content creation when combined with other generative AI models like GPT-3.

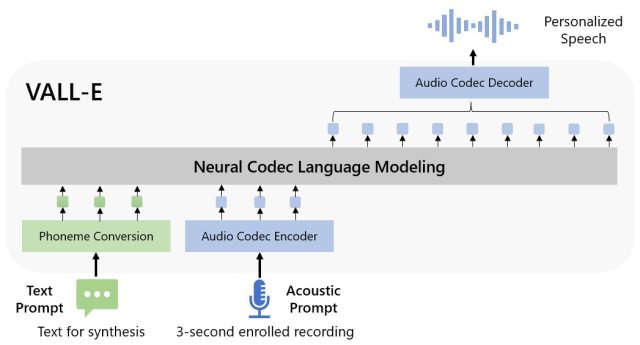

Microsoft calls VALL-E a “neural codec language model,” and it builds off of a technology called EnCodec, which Meta announced in October 2022. Unlike other text-to-speech methods that typically synthesize speech by manipulating waveforms, VALL-E generates discrete audio codec codes from text and acoustic prompts. It basically analyzes how a person sounds, breaks that information into discrete components (called “tokens”) thanks to EnCodec, and uses training data to match what it “knows” about how that voice would sound if it spoke other phrases outside of the three-second sample. Or, as Microsoft puts it in the VALL-E paper:
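To make the idea of audio “tokens” concrete, here is a minimal Python sketch of the underlying trick, vector quantization: each short frame of audio features is replaced by the index of its nearest entry in a codebook. It is a toy, assuming a single hand-written four-entry codebook and made-up 2-D feature frames. EnCodec itself learns its codebooks from data and stacks several quantization stages, and none of the names below (`CODEBOOK`, `encode`, `decode`) come from EnCodec or VALL-E; they are hypothetical.

```python
import math

# Hypothetical toy codebook: each entry is a 2-D "acoustic feature" vector.
# A real neural codec learns much larger codebooks from data; these values
# are made up purely for illustration.
CODEBOOK = [
    (0.0, 0.0),
    (1.0, 0.0),
    (0.0, 1.0),
    (1.0, 1.0),
]


def encode(frames):
    """Map each feature frame to the index (token) of its nearest codebook entry."""
    tokens = []
    for frame in frames:
        distances = [math.dist(frame, code) for code in CODEBOOK]
        tokens.append(distances.index(min(distances)))
    return tokens


def decode(tokens):
    """Turn tokens back into approximate feature frames by codebook lookup."""
    return [CODEBOOK[t] for t in tokens]


frames = [(0.1, 0.2), (0.9, 0.1), (0.8, 0.95), (0.05, 0.7)]
tokens = encode(frames)
print(tokens)  # [0, 1, 3, 2]
print(decode(tokens))
```

Decoding is only a codebook lookup, so it recovers an approximation of the input frames rather than the exact values. That compact, discrete sequence of integers is the kind of representation a language-model-style network can predict one token at a time.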

To synthesize personalized speech (e.g., zero-shot TTS), VALL-E generates the corresponding acoustic tokens conditioned on the acoustic tokens of the 3-second enrolled recording and the phoneme prompt, which constrain the speaker and content information respectively. Finally, the generated acoustic tokens are used to synthesize the final waveform with the corresponding neural codec decoder.

Microsoft trained VALL-E’s speech-synthesis skills on an audio library, assembled by Meta, called LibriLight. It contains 60,000 hours of English-language speech from more than 7,000 speakers, mostly pulled from LibriVox public domain audiobooks. For VALL-E to generate a good result, the voice in the three-second sample must closely match a voice in the training data.

On the VALL-E example website, Microsoft provides dozens of audio examples of the AI model in action. Among the samples, the “Speaker Prompt” is the three-second audio provided to VALL-E that it must imitate. The “Ground Truth” is a pre-existing recording of that same speaker saying a particular phrase for comparison purposes (sort of like the “control” in the experiment). The “Baseline” is an example of synthesis provided by a conventional text-to-speech synthesis method, and the “VALL-E” sample is the output from the VALL-E model.


When using VALL-E to generate those results, the researchers fed only the 3-second “Speaker Prompt” sample and a text string (what they wanted the voice to say) into VALL-E. So compare the “Ground Truth” sample to the “VALL-E” sample. In some cases, the two samples are very close. Some VALL-E results seem computer-generated, but others could potentially be mistaken for a human’s speech, which is the goal of the model.

In addition to preserving a speaker’s vocal timbre and emotional tone, VALL-E can also imitate the “acoustic environment” of the sample audio. For example, if the sample came from a telephone call, the audio output will simulate the acoustic and frequency properties of a telephone call in its synthesized output (which is a fancy way of saying it will sound like a telephone call, too). And Microsoft’s samples (in the “Synthesis of Diversity” section) demonstrate that VALL-E can generate variations in voice tone by changing the random seed used in the generation process.
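Why would a random seed change the output? Models of this kind typically produce their acoustic tokens one at a time by sampling from a probability distribution, so the random number generator’s starting state decides which plausible continuation gets picked. The Python sketch below is a toy illustration of that general idea, not VALL-E: the hypothetical `next_token_weights` function stands in for a trained network, the four-token vocabulary is invented, and the phoneme (text) conditioning VALL-E uses is left out entirely.

```python
import random

# Hypothetical toy "language model" over a vocabulary of 4 acoustic tokens.
# It simply favours repeating the previous token; it stands in for a trained
# network and knows nothing about real speech.
VOCAB_SIZE = 4


def next_token_weights(context):
    """Return unnormalised sampling weights for the next token given the context."""
    last = context[-1]
    return [3.0 if tok == last else 1.0 for tok in range(VOCAB_SIZE)]


def generate(prompt_tokens, length, seed):
    """Extend the prompt by sampling `length` new tokens with a seeded RNG."""
    rng = random.Random(seed)  # private RNG, so the seed alone determines the output
    context = list(prompt_tokens)
    for _ in range(length):
        weights = next_token_weights(context)
        context.append(rng.choices(range(VOCAB_SIZE), weights=weights)[0])
    return context[len(prompt_tokens):]  # return only the newly generated tokens


prompt = [2, 2, 1]  # stands in for the tokens of a short speaker prompt
print(generate(prompt, 8, seed=0))
print(generate(prompt, 8, seed=1))
```

The same prompt and seed always reproduce the same tokens, while a different seed usually yields a different but equally plausible sequence.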

Perhaps owing to VALL-E’s ability to fuel mischief and deception, Microsoft has not released VALL-E code for others to experiment with, so we could not test VALL-E’s capabilities. The researchers seem aware of the potential social harm that this technology could bring. In the paper’s conclusion, they write:

“Since VALL-E could synthesize speech that maintains speaker identity, it may carry potential risks in misuse of the model, such as spoofing voice identification or impersonating a specific speaker. To mitigate such risks, it is possible to build a detection model to discriminate whether an audio clip was synthesized by VALL-E. We will also put Microsoft AI Principles into practice when further developing the models.”